Is TensorZero Production Ready? Deep Dive & Setup Guide

Technical analysis of TensorZero. Architecture review, deployment guide, and production-readiness verdict. 10.7k stars.

TensorZero is trending with 10.7k stars. It represents a shift in AI engineering from “prompt engineering in notebooks” to “optimizing inference as a system.” Here is the architectural breakdown.

🛠️ What is it?

TensorZero is an open-source LLM Gateway and Experimentation Platform written in Rust. Unlike standard gateways (like LiteLLM) that primarily handle routing and auth, TensorZero is opinionated about data-driven optimization.

It sits between your application and the Model Providers (OpenAI, Anthropic, etc.), turning raw LLM calls into “Inference Functions.” This allows you to decouple your application logic from specific models and prompts, enabling real-time experimentation, A/B testing, and feedback collection without deploying new application code.
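
To make the decoupling concrete, here is a minimal Python sketch of the idea, not TensorZero's implementation. The registry, the function name `summarize_ticket`, the model labels, and the helper names are all hypothetical. The application asks for a function by name, and the layer in between decides which model and prompt to use.

```python
import random
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    """One way to fulfil a function: a model plus a prompt template."""
    name: str
    model: str
    user_template: str
    weight: float  # relative share of traffic for this variant


# Hypothetical registry; in TensorZero this role is played by configuration.
FUNCTIONS = {
    "summarize_ticket": [
        Variant("baseline", "model-a", "Summarize this ticket: {{ text }}", 0.8),
        Variant("candidate", "model-b", "Summarize in one sentence: {{ text }}", 0.2),
    ]
}


def render(template: str, values: dict) -> str:
    """Replace {{ key }} placeholders; a stand-in for a real template engine."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(values[m.group(1)]), template)


def plan_call(function_name: str, values: dict, rng: random.Random) -> dict:
    """Pick a variant by weight and return a provider-agnostic call plan."""
    variants = FUNCTIONS[function_name]
    chosen = rng.choices(variants, weights=[v.weight for v in variants], k=1)[0]
    return {
        "variant": chosen.name,
        "model": chosen.model,
        "prompt": render(chosen.user_template, values),
    }
```

The caller only ever passes `"summarize_ticket"` and its input. Shifting traffic to the candidate, or swapping its model, means editing the registry rather than the application.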

Key Architectural Components

- The Gateway (Rust):
  - Located in gateway/, this is the high-performance core. It handles request routing, rate limiting, and the execution of “Inference Functions.”
  - It uses a tensorzero.toml configuration to define functions (e.g., generate_summary) and their variants (e.g., gpt-4o-mini vs claude-3-haiku).
  - It abstracts the provider APIs, normalizing inputs and outputs so your app code remains stable even if the underlying model changes.

- The Data Warehouse (ClickHouse):
  - TensorZero relies heavily on ClickHouse (visible in gateway/src/db/clickhouse) for storing high-volume inference traces and feedback data.
  - This design choice indicates a focus on scale. Row-oriented Postgres logging tends to struggle with analytical queries at high write volumes, whereas ClickHouse's columnar storage lets TensorZero run complex analytics and optimization queries over millions of inference rows.

- The Optimization Engine:
  - The tensorzero-optimizers crate reveals sophisticated strategies like DICL (Dynamic In-Context Learning) and GEPA (Genetic-Pareto prompt optimization).
  - The system can automatically select the best “variant” (prompt + model combo) based on real-time feedback scores (e.g., user thumbs up/down) or programmatic graders.

- The Control Plane (UI):
  - A React/Remix application (ui/) that provides observability into traces, dataset management, and configuration of experiments.
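
Feedback-driven variant selection can be illustrated with a small epsilon-greedy sketch. This is a deliberately simple stand-in: it is not DICL, not GEPA, and not the selection logic TensorZero ships. The class, method, and variant names are hypothetical. It shows only the basic loop of recording a score per variant and favouring the variant with the best mean.

```python
import random
from collections import defaultdict


class VariantSelector:
    """Epsilon-greedy choice between variants based on mean feedback."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.variants = list(variants)
        self.epsilon = epsilon          # probability of exploring at random
        self.rng = random.Random(seed)
        self.total = defaultdict(float)  # sum of scores per variant
        self.count = defaultdict(int)    # number of scores per variant

    def record(self, variant, score):
        """Store one feedback score (e.g. 1.0 for thumbs up, 0.0 for down)."""
        self.total[variant] += score
        self.count[variant] += 1

    def mean(self, variant):
        """Average score so far, or 0.0 if the variant has no feedback yet."""
        n = self.count[variant]
        return self.total[variant] / n if n else 0.0

    def choose(self):
        """Try unseen variants first, then mostly exploit the best mean."""
        untried = [v for v in self.variants if self.count[v] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.variants)
        return max(self.variants, key=self.mean)
```

A production system would also need to handle delayed feedback, statistical significance, and per-metric optimization direction, all of which this sketch ignores.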

🚀 Quick Start

TensorZero is best run via Docker Compose due to its dependency on ClickHouse and Postgres.

1. Setup & Run

    # Clone the repository
    git clone https://github.com/tensorzero/tensorzero
    cd tensorzero

    # Start the Gateway, UI, ClickHouse, and Postgres
    docker compose up -d

2. Define a Function

Create a tensorzero.toml file (or edit the default one mapped in docker-compose) to define a function.

    # config/tensorzero.toml
    [functions.weather_joke]
    system_schema = { type = "string" }
    user_schema = { type = "object", properties = { city = { type = "string" } } }

    [functions.weather_joke.variants.gpt_joke]
    type = "chat_completion"
    model = "gpt-4o-mini"
    system_template = "You are a funny weatherman."
    user_template = "Tell me a joke about the weather in {{ city }}."

3. Invoke via API

Instead of calling OpenAI directly, you call TensorZero. Note that you call the function name (weather_joke), not a specific model.
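
From application code, the same request can be assembled with the Python standard library. This is a sketch under assumptions: the URL, port, and payload shape are copied from the curl request in this step and have not been verified against a running gateway, and the helper names are hypothetical.

```python
import json
import urllib.request

GATEWAY_URL = "http://localhost:3000"  # host and port taken from the curl example


def build_inference_request(function_name: str, input_payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST to the gateway's /inference endpoint."""
    body = json.dumps(
        {"function_name": function_name, "input": input_payload}
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"{GATEWAY_URL}/inference",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_gateway(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply (needs a running gateway)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Note that the application names the function (`weather_joke`) and never a model, so switching the variant behind it requires no change here.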

    curl -X POST http://localhost:3000/inference \
      -H "Content-Type: application/json" \
      -d '{
        "function_name": "weather_joke",
        "input": {
          "city": "Seattle"
        }
      }'

4. Send Feedback

To close the loop, send feedback on the result to help TensorZero optimize future calls. The metric name (user_rating in this example) must match a metric defined in your configuration.
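
Linking feedback to an inference amounts to carrying the returned ID forward. The sketch below assumes the inference response is a JSON object with a top-level `inference_id` field holding a UUID string. The field names mirror the feedback request in this step, and the helper names are hypothetical.

```python
import json
import uuid


def build_feedback(inference_response: dict, metric_name: str, value) -> dict:
    """Create a feedback payload tied to a previous inference."""
    inference_id = inference_response["inference_id"]
    uuid.UUID(inference_id)  # raises ValueError if the ID is malformed
    return {
        "inference_id": inference_id,
        "metric_name": metric_name,
        "value": value,
    }


def encode_feedback(payload: dict) -> bytes:
    """Serialize the payload as the JSON body of a POST to /feedback."""
    return json.dumps(payload).encode("utf-8")
```

Validating the ID on the client side catches the common mistake of sending the placeholder string instead of the real value.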

    # Use the inference_id returned from the previous call
    curl -X POST http://localhost:3000/feedback \
      -H "Content-Type: application/json" \
      -d '{
        "inference_id": "UUID_FROM_PREVIOUS_RESPONSE",
        "metric_name": "user_rating",
        "value": 1.0
      }'

⚖️ The Verdict

Production Readiness: ⭐⭐⭐⭐ (4/5)

TensorZero is a robust piece of engineering. The choice of Rust for the data plane and ClickHouse for the storage layer suggests the team understands the latency and throughput requirements of production LLM applications.

Pros:

- Performance: Rust + ClickHouse is a “Ferrari” stack for this use case.

- Abstraction: Decoupling “Business Logic” from “Prompt Logic” is the correct way to build GenAI apps at scale.

- Closed Loop: The built-in feedback and optimization mechanisms (DICL, GEPA) are features usually found only in expensive enterprise platforms.

Cons:

- Infrastructure Weight: Running ClickHouse and Postgres adds operational complexity. It is overkill for a simple side project.

- Learning Curve: You must adopt their mental model of “Functions” and “Variants” rather than just sending raw strings to an API.

Recommendation: If you are an AI Engineering team building a product where quality and cost optimization are critical, TensorZero is an excellent, self-hostable choice that prevents vendor lock-in. If you just need to prototype a chatbot, it might be too heavy.

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

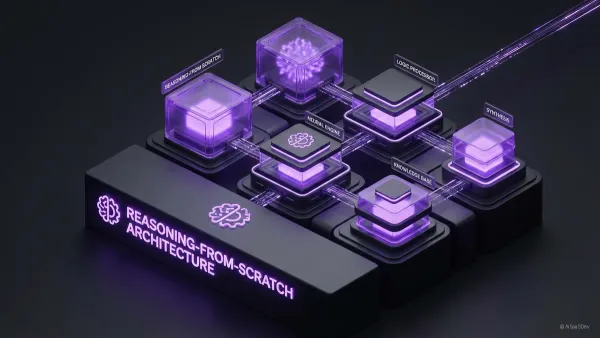

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.