Is Perplexica Production Ready? Deep Dive & Setup Guide

Technical analysis of Perplexica. Architecture review, deployment guide, and production-readiness verdict. 27767 stars.

Is Perplexica Production Ready?

Perplexica is trending with 27,767 stars because it addresses the “Black Box” problem of current AI search engines. While Perplexity AI and Google Gemini are powerful, they are closed ecosystems with privacy trade-offs.

Perplexica is an open-source, privacy-first alternative that decouples the Reasoning Engine (LLM) from the Information Retrieval Engine (Search). It allows engineers to self-host a research assistant that uses local models (via Ollama) or proprietary APIs (OpenAI/Anthropic), while routing actual web queries through a private metasearch instance.

🛠️ Architecture Deep Dive

Perplexica is built as a monolithic TypeScript application (Next.js) that orchestrates a RAG (Retrieval-Augmented Generation) pipeline.

The Stack

- Frontend/Backend: Next.js (React/Node.js). Handles the UI, state management, and API routes.

- Search Layer: SearxNG. This is the critical component. SearxNG is a metasearch engine that aggregates results from Google, Bing, DuckDuckGo, and Reddit without passing user IP or tracking data to the source.

- Inference: Agnostic. Supports Ollama (local Llama 3/Mistral), OpenAI, Groq, and Anthropic.

- Vector/Storage: Uses a local file-based system for history and context, minimizing database overhead.
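The "agnostic" inference layer can be pictured as a small interface that every model backend satisfies. The sketch below is illustrative only: `ChatProvider`, `EchoProvider`, and `ProviderRegistry` are hypothetical names, not taken from the Perplexica codebase, and the stub provider stands in for real Ollama or OpenAI clients.

```typescript
// Hypothetical names throughout; this is not Perplexica's actual API.
interface ChatProvider {
  readonly name: string;
  complete(prompt: string): Promise<string>;
}

// Stub backend that echoes the prompt. A real backend would call
// Ollama, OpenAI, Groq, or Anthropic here instead.
class EchoProvider implements ChatProvider {
  readonly name: string;

  constructor(name: string) {
    this.name = name;
  }

  async complete(prompt: string): Promise<string> {
    return `[${this.name}] ${prompt}`;
  }
}

// Keeps every registered backend and tracks which one is active.
class ProviderRegistry {
  private providers = new Map<string, ChatProvider>();
  private active: string | undefined;

  register(provider: ChatProvider): void {
    this.providers.set(provider.name, provider);
  }

  // Switching models is a lookup, not a code change.
  use(name: string): void {
    if (!this.providers.has(name)) {
      throw new Error(`unknown provider: ${name}`);
    }
    this.active = name;
  }

  current(): ChatProvider {
    const provider =
      this.active === undefined ? undefined : this.providers.get(this.active);
    if (provider === undefined) {
      throw new Error("no active provider");
    }
    return provider;
  }
}

const registry = new ProviderRegistry();
registry.register(new EchoProvider("ollama"));
registry.register(new EchoProvider("openai"));
registry.use("ollama");
```

Because the rest of the pipeline only sees `ChatProvider`, changing the active backend does not touch retrieval or citation logic.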

The Request Lifecycle

- Query Analysis: The user’s prompt is analyzed by the “Focus Mode” logic (e.g., if “YouTube” mode is selected, the search scope is restricted).

- Query Transformation: The LLM rephrases the natural language query into optimized search strings compatible with search engines.

- Retrieval (SearxNG): The backend hits the local SearxNG instance. SearxNG scrapes the results and returns raw text/metadata.

- Context Injection: The retrieved content is cleaned and injected into the LLM’s context window.

- Citation Generation: The LLM generates an answer, strictly instructed to reference the injected sources, providing clickable citations similar to Perplexity.
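The lifecycle above can be sketched as three pure functions. This is a minimal illustration under stated assumptions, not Perplexica's source: the type and function names are hypothetical, the focus-mode-to-engine mapping is assumed, and the query-transformation step is left out because it requires a model call. The `q`, `format`, and `engines` parameters are SearxNG's search API parameters; JSON output has to be enabled in the SearxNG settings for `format=json` to work.

```typescript
// Hypothetical types and names; not taken from the Perplexica codebase.
type FocusMode = "web" | "youtube" | "reddit";

interface SearchResult {
  title: string;
  url: string;
  content: string;
}

// Assumed mapping from focus mode to SearxNG engine names.
// An empty list means "use the instance defaults".
const ENGINES_BY_MODE: Record<FocusMode, string[]> = {
  web: [],
  youtube: ["youtube"],
  reddit: ["reddit"],
};

// Query analysis + retrieval: restrict scope by focus mode and
// build the URL for SearxNG's JSON search endpoint.
function buildSearchUrl(baseUrl: string, query: string, mode: FocusMode): string {
  const params = [`q=${encodeURIComponent(query)}`, "format=json"];
  const engines = ENGINES_BY_MODE[mode];
  if (engines.length > 0) {
    params.push(`engines=${encodeURIComponent(engines.join(","))}`);
  }
  // Strip trailing slashes so the path is not doubled.
  return `${baseUrl.replace(/\/+$/, "")}/search?${params.join("&")}`;
}

// Context injection: collapse whitespace, truncate each snippet,
// and number the sources so the model can cite them.
function buildContext(results: SearchResult[], maxChars = 500): string {
  return results
    .map((r, i) => {
      const text = r.content.replace(/\s+/g, " ").trim().slice(0, maxChars);
      return `[${i + 1}] ${r.title} (${r.url})\n${text}`;
    })
    .join("\n\n");
}

// Citation generation: instruct the model to answer only from the
// numbered sources and to cite them by number.
function buildAnswerPrompt(question: string, results: SearchResult[]): string {
  return [
    "Answer the question using only the numbered sources below.",
    "Cite every claim with its source number in square brackets, e.g. [1].",
    "",
    buildContext(results),
    "",
    `Question: ${question}`,
  ].join("\n");
}
```

For example, `buildSearchUrl("http://localhost:8080/", "rust async", "youtube")` returns `http://localhost:8080/search?q=rust%20async&format=json&engines=youtube`. The port is an assumption about where the SearxNG instance listens.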

🚀 Quick Start

For a stable environment, Docker is the only practical way to run this, because the application depends on a SearxNG instance running alongside it.

Prerequisites

- Docker & Docker Compose

- (Optional) Ollama running on the host machine for local inference.

Deployment

- Clone and Setup

  Do not run npm start unless you are developing. Use the pre-built images.

      git clone https://github.com/ItzCrazyKns/Perplexica.git
      cd Perplexica

- Configuration (config.toml)

  Ensure you create a config.toml if one isn’t auto-generated, though the UI setup is preferred in v1.x. The critical step is mapping the volumes correctly.

- Run with Docker

  This command spins up Perplexica and the bundled SearxNG instance.

      # Create persistent volumes first
      docker volume create perplexica-data
      docker volume create perplexica-uploads

      # Run the container
      docker run -d \
        -p 3000:3000 \
        --name perplexica \
        -v perplexica-data:/home/perplexica/data \
        -v perplexica-uploads:/home/perplexica/uploads \
        itzcrazykns1337/perplexica:latest

- Access & Connect LLM

  - Navigate to http://localhost:3000.

  - Go to Settings.

  - If using Ollama (Linux): Set host to http://172.17.0.1:11434 (Docker host bridge IP).

  - If using Ollama (Mac/Windows): Set host to http://host.docker.internal:11434.
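The Ollama host rule from the last step can be written down as a small helper. This is a sketch, not part of Perplexica: `HostOs`, `ollamaHostFor`, and `ollamaModelsUrl` are hypothetical names. It assumes Docker's default bridge gateway (`172.17.0.1`) on Linux, which can differ on hosts with a customized Docker network, and uses Ollama's `/api/tags` endpoint, which lists locally installed models.

```typescript
// Hypothetical helper names; the addresses come from the setup steps.
type HostOs = "linux" | "darwin" | "win32";

// Port used in the Ollama URLs given in the setup steps.
const OLLAMA_PORT = 11434;

// Linux containers reach the host through the bridge gateway;
// Docker Desktop on Mac/Windows provides host.docker.internal.
function ollamaHostFor(os: HostOs): string {
  const host = os === "linux" ? "172.17.0.1" : "host.docker.internal";
  return `http://${host}:${OLLAMA_PORT}`;
}

// URL of Ollama's model-listing endpoint, useful as a reachability check.
function ollamaModelsUrl(os: HostOs): string {
  return `${ollamaHostFor(os)}/api/tags`;
}
```

If a GET request to `ollamaModelsUrl(...)` from inside the container returns a JSON list of models, the host value entered in Settings should work as well.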

⚖️ The Verdict

Production Status: Prosumer / Internal Tooling

Perplexica is an impressive piece of engineering, but it is not yet “Enterprise Production” ready for high-concurrency, public-facing deployments.

Strengths

- Privacy: Data never leaves your network if you use Ollama.

- Modularity: Swapping the backend model from GPT-4o to Llama-3-70b takes seconds.

- SearxNG Integration: This is the killer feature. It solves the “how do I search the web with an LLM” problem without paying for expensive Bing Search APIs.

Weaknesses

- SearxNG Rate Limits: If you deploy this for a team of 50 people, the underlying SearxNG instance will likely get CAPTCHA-blocked by Google/Bing. You will need to configure rotating proxies within SearxNG.

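- Single Point of Failure: The current architecture is monolithic. The UI, API routes, and search orchestration all live in one Next.js application, so if that process goes down, the whole service goes down with it.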

Recommendation: Deploy this for your personal “Home Lab” or a small internal engineering team to reduce API costs and prevent data leakage. Do not expose it to the public internet without an auth layer (like Authelia) in front of it.

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

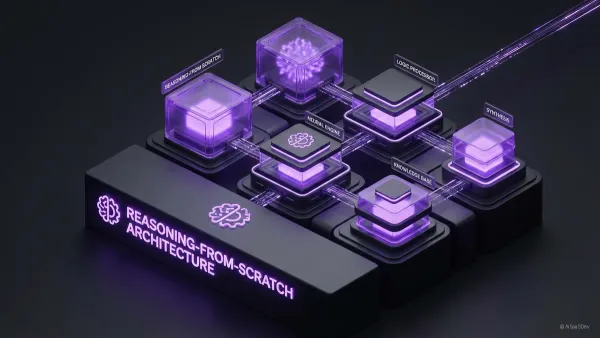

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.