Is OpenMemory Production Ready? Deep Dive & Setup Guide

Technical analysis of OpenMemory. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

OpenMemory is trending with 2.7k stars. Here is the architectural breakdown.

🛠️ What is it?

OpenMemory is a self-hosted “cognitive memory engine” designed to solve the amnesia problem in AI agents, which otherwise forget context between sessions. Unlike standard RAG (Retrieval-Augmented Generation), which simply fetches relevant text chunks, OpenMemory mimics biological memory processes. It classifies information into five distinct Cognitive Sectors (Episodic, Semantic, Procedural, Emotional, Reflective), each with its own decay rate and retrieval weight.

It runs locally or on your server and uses a Hierarchical Semantic Graph (HSG) that combines vector embeddings with graph-based “waypoints.” This lets agents do more than recall facts: they can follow associative paths, recalling context A because it is linked to context B, even when the two share no keywords.
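To make the idea concrete, here is a minimal TypeScript sketch of hybrid recall, assuming a hypothetical `MemoryNode` shape with an embedding and a list of waypoint ids (these names are illustrative, not OpenMemory's API). Vector similarity picks the seed memories, and one hop along the waypoints pulls in associated memories that the query alone would miss.

```typescript
// Hypothetical memory node: an embedding plus "waypoint" links to associated memories.
interface MemoryNode {
  id: string;
  embedding: number[];
  waypoints: string[];
}

function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let na = 0;
  let nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na === 0 || nb === 0 ? 0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Seed with the k nodes most similar to the query, then follow their waypoints
// one hop, even to nodes that are not similar to the query themselves.
function recall(query: number[], nodes: MemoryNode[], k = 1): string[] {
  const known = new Set(nodes.map((n) => n.id));
  const seeds = nodes
    .slice()
    .sort((x, y) => cosine(query, y.embedding) - cosine(query, x.embedding))
    .slice(0, k);
  const seen = new Set<string>();
  const result: string[] = [];
  for (const seed of seeds) {
    for (const id of [seed.id, ...seed.waypoints]) {
      if (known.has(id) && !seen.has(id)) {
        seen.add(id);
        result.push(id);
      }
    }
  }
  return result;
}

const nodes: MemoryNode[] = [
  { id: "A", embedding: [1, 0], waypoints: ["B"] },
  { id: "B", embedding: [0, 1], waypoints: [] },
  { id: "C", embedding: [0.7, 0.7], waypoints: [] },
];

console.log(recall([1, 0], nodes)); // [ 'A', 'B' ]
```

Here `B` is returned even though its embedding is orthogonal to the query, purely because `A` links to it. A real graph would also weight and cap those hops.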

🏗️ Architecture Deep Dive

The system is built primarily in TypeScript (Node.js) with a Python SDK available. It operates as a standalone service or embedded library.

1. The Core Engine (packages/openmemory-js)

The heart of the system is the HSG (Hierarchical Semantic Graph), which orchestrates storage and retrieval.

- Storage Layer: Supports SQLite for zero-config local use and PostgreSQL for production scale.

- Vector Store: Pluggable backend supporting pgvector (Postgres), Valkey (Redis fork), or a simple blob-based store for SQLite.

- Dynamics System: Implements “Spreading Activation” logic (dynamics.ts). When a memory is recalled, it “energizes” connected nodes in the graph, making related memories easier to retrieve in the same session.
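The article does not reproduce `dynamics.ts`, so the following is only a sketch of the general spreading-activation technique under assumed parameters: the recalled node starts at activation 1.0 and each hop passes on a damped share (`damping`, here 0.5) for up to `maxDepth` hops. The `Graph` type and the function name are hypothetical.

```typescript
// Hypothetical graph: memory id -> ids of linked memories.
type Graph = Record<string, string[]>;

// Energize the recalled node and spread a damped share of its energy to
// neighbours hop by hop, keeping the highest activation each node receives.
function spreadActivation(
  graph: Graph,
  start: string,
  initial = 1.0,
  damping = 0.5,
  maxDepth = 2,
): Record<string, number> {
  const activation: Record<string, number> = { [start]: initial };
  let frontier: string[] = [start];
  for (let depth = 1; depth <= maxDepth; depth++) {
    const next: string[] = [];
    for (const id of frontier) {
      const energy = activation[id] * damping;
      for (const neighbour of graph[id] ?? []) {
        // Only update (and keep spreading from) nodes that gain energy.
        if ((activation[neighbour] ?? 0) < energy) {
          activation[neighbour] = energy;
          next.push(neighbour);
        }
      }
    }
    frontier = next;
  }
  return activation;
}

const graph: Graph = { A: ["B"], B: ["C"], C: ["D"], D: [] };
console.log(spreadActivation(graph, "A")); // { A: 1, B: 0.5, C: 0.25 }
```

`D` stays inactive because it sits three hops away. During retrieval, these activation values could be added as a session-scoped bonus to each memory's similarity score.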

2. Cognitive Sectors & Decay

The engine automatically classifies input into sectors using regex patterns and LLM analysis:

- Episodic: Time-bound events (Medium decay).

- Semantic: Timeless facts (Very low decay).

- Procedural: Skills/How-tos (Low decay).

- Emotional: Sentiment/Mood (High decay).

- Reflective: Meta-cognition/Insights (Very low decay).
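A minimal sketch of the regex half of that classification step, assuming hypothetical keyword patterns (OpenMemory's actual rules and its LLM fallback are not shown here). Patterns are checked in order, and unmatched text defaults to the Semantic sector.

```typescript
type Sector = "episodic" | "semantic" | "procedural" | "emotional" | "reflective";

// Illustrative patterns only; these are NOT OpenMemory's real rules.
const PATTERNS: Array<[Sector, RegExp]> = [
  ["procedural", /\b(how to|step \d+|install|configure)\b/i],
  ["emotional", /\b(feel|felt|happy|sad|angry|frustrated|love|hate)\b/i],
  ["reflective", /\b(in hindsight|lesson learned|i realized|i realised|insight)\b/i],
  ["episodic", /\b(yesterday|today|this morning|last (week|month|year))\b/i],
];

// First matching pattern wins; anything unmatched falls back to "semantic",
// the sector for timeless facts. Ambiguous text could be sent to an LLM instead.
function classify(text: string): Sector {
  for (const [sector, pattern] of PATTERNS) {
    if (pattern.test(text)) return sector;
  }
  return "semantic";
}

console.log(classify("Yesterday we shipped v2")); // episodic
console.log(classify("I prefer using TypeScript for backend projects.")); // semantic
```

Ordering matters: "I felt frustrated yesterday" is labelled emotional because that pattern is checked before the episodic one. Cases like this are where an LLM pass earns its cost.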

A background Decay Process (decay.ts) runs periodically, reducing the “salience” (importance) of memories over time unless they are reinforced by access or reflection.
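One common way to model this is exponential decay with a per-sector half-life, plus a boost whenever a memory is accessed. The sketch below assumes that model; the half-life values are hypothetical numbers chosen only to mirror the qualitative rates listed above, not values taken from `decay.ts`.

```typescript
type Sector = "episodic" | "semantic" | "procedural" | "emotional" | "reflective";

// Hypothetical half-lives in days, mirroring "very low" to "high" decay.
const HALF_LIFE_DAYS: Record<Sector, number> = {
  semantic: 365,
  reflective: 365,
  procedural: 180,
  episodic: 30,
  emotional: 7,
};

interface Mem {
  sector: Sector;
  salience: number; // importance in [0, 1]
  lastAccessMs: number; // epoch ms of the last access or reinforcement
}

const DAY_MS = 24 * 60 * 60 * 1000;

// Exponential decay: salience halves once per half-life since last access.
function decayedSalience(m: Mem, nowMs: number): number {
  const days = (nowMs - m.lastAccessMs) / DAY_MS;
  return m.salience * Math.pow(0.5, days / HALF_LIFE_DAYS[m.sector]);
}

// Access reinforces a memory: apply decay so far, add a boost (capped at 1), reset the clock.
function reinforce(m: Mem, nowMs: number, boost = 0.2): Mem {
  const current = decayedSalience(m, nowMs);
  return { ...m, salience: Math.min(1, current + boost), lastAccessMs: nowMs };
}

const week = 7 * DAY_MS;
const mood: Mem = { sector: "emotional", salience: 0.8, lastAccessMs: 0 };
const fact: Mem = { sector: "semantic", salience: 0.8, lastAccessMs: 0 };
console.log(decayedSalience(mood, week)); // 0.4
console.log(decayedSalience(fact, week).toFixed(3)); // 0.789
```

With these assumed numbers an Emotional memory loses half its salience in a week, while a Semantic one barely moves.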

3. Reflection & Consolidation

A background job (reflect.ts) clusters related memories to generate higher-level insights. For example, if an agent sees “User likes Python” and “User likes Django” separately, the reflection process consolidates this into a Semantic memory: “User is a Python web developer.”
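A hedged sketch of the clustering step, assuming each memory already has an embedding: a greedy single-pass clusterer groups memories whose cosine similarity to a cluster's first member meets a threshold, and each multi-member cluster is handed to a `summarize` callback. In a real reflection job that callback would be an LLM call; here it is a stub, and all names are hypothetical.

```typescript
interface Item {
  id: string;
  text: string;
  embedding: number[];
}

function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let na = 0;
  let nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na === 0 || nb === 0 ? 0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Greedy single pass: join the first cluster whose seed (first member) is
// similar enough, otherwise start a new cluster.
function cluster(items: Item[], threshold = 0.8): Item[][] {
  const clusters: Item[][] = [];
  for (const item of items) {
    const home = clusters.find((c) => cosine(c[0].embedding, item.embedding) >= threshold);
    if (home) home.push(item);
    else clusters.push([item]);
  }
  return clusters;
}

// Only clusters with 2+ members are consolidated into a higher-level insight.
function reflect(items: Item[], summarize: (texts: string[]) => string, threshold = 0.8): string[] {
  return cluster(items, threshold)
    .filter((c) => c.length >= 2)
    .map((c) => summarize(c.map((i) => i.text)));
}

const items: Item[] = [
  { id: "1", text: "User likes Python", embedding: [0.9, 0.1] },
  { id: "2", text: "User likes Django", embedding: [0.85, 0.2] },
  { id: "3", text: "User has a dog", embedding: [0.1, 0.9] },
];
const insights = reflect(items, (texts) => `Consolidated: ${texts.join(" + ")}`);
console.log(insights); // [ 'Consolidated: User likes Python + User likes Django' ]
```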

4. Integration Layer

- MCP Server: Built-in support for the Model Context Protocol, allowing direct integration with AI clients such as Cursor, Windsurf, and Claude Desktop.

- Connectors: Ingestion pipelines for GitHub, Notion, Google Drive, and web crawling.
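The connectors' internals are not described here. As an illustration only, an ingestion pipeline can be reduced to two steps: a connector lists documents, and the pipeline splits them into paragraph-sized chunks before storing each one. The `Connector` and `SourceDoc` interfaces and the `add` callback below are hypothetical.

```typescript
// Hypothetical document shape produced by a connector.
interface SourceDoc {
  source: string; // e.g. "github", "notion", "web"
  uri: string;
  text: string;
}

// A connector is anything that can list documents (repo files, pages, crawled URLs).
interface Connector {
  fetch(): Promise<SourceDoc[]>;
}

// Split on blank lines, then pack paragraphs into chunks of at most maxChars.
function chunk(text: string, maxChars = 500): string[] {
  const chunks: string[] = [];
  let current = "";
  const paragraphs = text.split(/\n\s*\n/).map((p) => p.trim()).filter((p) => p.length > 0);
  for (const para of paragraphs) {
    if (current && current.length + para.length + 2 > maxChars) {
      chunks.push(current);
      current = "";
    }
    current = current ? `${current}\n\n${para}` : para;
  }
  if (current) chunks.push(current);
  return chunks;
}

// Fetch every document, chunk it, and store each chunk with its provenance.
async function ingest(
  connector: Connector,
  add: (text: string, meta: Record<string, string>) => Promise<void>,
  maxChars = 500,
): Promise<number> {
  let count = 0;
  for (const doc of await connector.fetch()) {
    for (const piece of chunk(doc.text, maxChars)) {
      await add(piece, { source: doc.source, uri: doc.uri });
      count++;
    }
  }
  return count;
}

const fake: Connector = {
  fetch: async () => [{ source: "web", uri: "https://example.com", text: "Intro.\n\nDetails here.\n\nMore." }],
};
const stored: string[] = [];
ingest(fake, async (text) => { stored.push(text); }, 15).then((n) => console.log(`stored ${n} chunks`)); // stored 3 chunks
```

A single paragraph longer than `maxChars` is kept as one oversized chunk; a fuller pipeline would split it further and deduplicate re-ingested documents.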

🚀 Quick Start

OpenMemory can be used as a library in your Node.js project or run as a standalone server.

Option 1: As a Library (Node.js)

```bash
npm install openmemory-js
```

```typescript
import { Memory } from 'openmemory-js';

// Top-level await requires an ES module (e.g. a .mjs file or "type": "module" in package.json).

// Initialize (uses SQLite by default)
const mem = new Memory("user-1");

// 1. Add a memory (automatically classified)
await mem.add("I prefer using TypeScript for backend projects.", {
  tags: ["coding", "preferences"]
});

// 2. Search with cognitive context
const results = await mem.search("What language should I use?", {
  sectors: ["semantic", "episodic"], // Focus on facts and past events
  limit: 1
});

console.log(results[0].content);
// Output: "I prefer using TypeScript for backend projects."
```

Option 2: As an MCP Server

To give your AI editor (Cursor/Claude) access to this memory, run:

```bash
npx openmemory-js serve
```

Then add this to your claude_desktop_config.json:

```json
{
  "mcpServers": {
    "openmemory": {
      "command": "npx",
      "args": ["openmemory-js", "serve"]
    }
  }
}
```

⚖️ The Verdict

OpenMemory represents a significant leap from “dumb” vector stores to “smart” agent memory.

- Strengths: The Cognitive Sectors and Decay/Reflection loops provide a much more human-like recall experience than standard RAG. The MCP integration makes it immediately useful for developers using AI coding assistants.

- Production Readiness: High for vertically scaled deployments. The architecture is solid for single-tenant or vertically scaled applications (e.g., personal agents, internal team tools). The SQLite default makes it very easy to deploy.

- Caution: For massive multi-tenant SaaS, the background processes (reflection/decay) would need careful orchestration to avoid resource contention on the database.
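One simple form of that orchestration, sketched under the assumption that each tenant's decay or reflection pass can be wrapped as an async job: run the jobs through a small worker pool so that only `limit` of them touch the database at once. This covers a single Node process only; coordinating several workers would need a shared lock, such as PostgreSQL advisory locks. All names are hypothetical.

```typescript
// Hypothetical per-tenant maintenance job (e.g. one tenant's decay or reflection pass).
type Job = () => Promise<void>;

// Run jobs with at most `limit` in flight. Workers pull from a shared index;
// JavaScript's single-threaded event loop makes `next++` race-free.
async function runWithLimit(jobs: Job[], limit: number): Promise<void> {
  let next = 0;
  async function worker(): Promise<void> {
    while (next < jobs.length) {
      const job = jobs[next++];
      await job();
    }
  }
  const poolSize = Math.max(1, Math.min(limit, jobs.length));
  await Promise.all(Array.from({ length: poolSize }, () => worker()));
}

// Demo: 10 fake jobs, never more than 3 running at once.
let active = 0;
let peak = 0;
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
const jobs: Job[] = Array.from({ length: 10 }, () => async () => {
  active++;
  peak = Math.max(peak, active);
  await sleep(5);
  active--;
});
runWithLimit(jobs, 3).then(() => console.log("peak concurrency:", peak)); // peak concurrency: 3
```

Staggering start times (for example, adding per-tenant jitter before each pass) spreads the load further.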

Rating: Production Ready (Tier 1 for Local/Internal Tools). A must-have for building stateful agents.

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

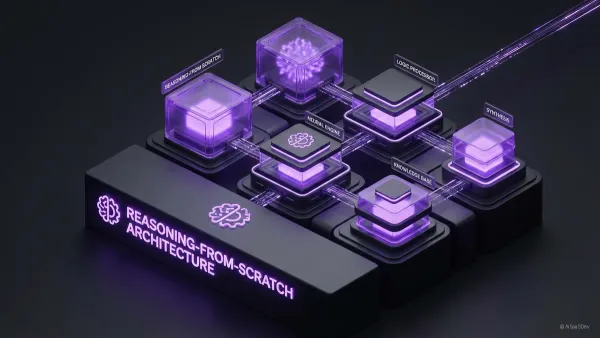

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.