Is Ollama Production Ready? Deep Dive & Setup Guide

Technical analysis of Ollama: architecture review, deployment guide, and production-readiness verdict for CTOs and founders.

Ollama is trending with 158.0k stars. But is it ready for production? Here’s the architectural breakdown.

🛠️ What is Ollama?

Ollama has effectively positioned itself as the “Docker for LLMs.” In the fragmented landscape of local Large Language Model (LLM) inference, where developers previously wrestled with Python virtual environments, C++ compilation flags for llama.cpp, and manual weight management, Ollama offers a unified runtime.

Its core value proposition is the abstraction of hardware acceleration and model management. Whether you are running on Apple Silicon (Metal), NVIDIA GPUs (CUDA), or AMD (ROCm), Ollama detects the hardware capabilities and configures the inference engine automatically. It packages the model weights, configuration, and prompt templates into a single manageable unit, accessible via a standardized CLI or REST API.

Why is it gaining such massive traction? It solves the “deployment friction” problem. Before Ollama, sharing a specific configuration of a model (e.g., Llama 3 with a particular system prompt and temperature setting) required sharing a repo of code and a separate weights file. With Ollama, this is encapsulated in a Modelfile, allowing teams to version-control their LLM configuration just as they would a containerized application. It typically adds support for new open-weight models such as Gemma 3, DeepSeek-R1, and Llama 3.3 soon after release, which has made it a de facto standard for local AI development.

🏗️ Architecture Breakdown

Ollama is written primarily in Go, likely chosen for its ability to produce static binaries that are easy to distribute across operating systems without external runtime dependencies.

Core Components:

- The Server (ollama serve): This is a long-running HTTP server that manages the lifecycle of loaded models. It handles the loading of model weights into VRAM/RAM and unloads them when idle to conserve resources. It exposes port 11434 by default.

- The Inference Engine: Under the hood, Ollama acts as a high-level wrapper around llama.cpp. It leverages the GGUF file format, which is optimized for fast loading and mapping to memory. This allows Ollama to run quantized models (e.g., 4-bit quantization) with minimal performance loss, making 70B+ parameter models runnable on consumer hardware.

- The Modelfile: Adopting a design pattern similar to Docker’s Dockerfile, the Modelfile defines the model’s base image (e.g., FROM llama3.2), parameters (temperature, context window), and system prompts. This declarative approach allows for reproducible builds of “model personalities.”

- API Layer: The architecture exposes a clean REST API (/api/generate, /api/chat, /api/embeddings). This decouples the inference logic from the application logic, allowing frontend applications (like the many WebUIs listed in the ecosystem) to interact with the model via standard JSON payloads.
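To sanity-check the quantization point in the Inference Engine item, a rough back-of-the-envelope calculation helps. The sketch below estimates memory for the weights only. It assumes a flat number of bits per weight, which is a simplification: real GGUF quantization schemes tend to average somewhat more than their nominal bit width, and the KV cache and runtime overhead come on top. The function name is illustrative and not part of Ollama.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (10**9 bytes).

    Assumption: every weight uses exactly `bits_per_weight` bits.
    KV cache, activations, and runtime overhead are NOT included.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# Compare a 70B-parameter model at three precisions.
for label, bits in [("FP16", 16), ("8-bit", 8), ("4-bit", 4)]:
    size_gb = estimate_weight_memory_gb(70e9, bits)
    print(f"70B @ {label}: ~{size_gb:.0f} GB for weights")
```

Under these assumptions a 70B model drops from roughly 140 GB at FP16 to roughly 35 GB at 4 bits, which is the difference between a multi-GPU server and a single high-memory workstation.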

Key Design Patterns:

- Adapter Pattern: It adapts various hardware backends (Metal, CUDA) into a unified inference interface.

- Client-Server: The CLI tool (ollama) is merely a client that communicates with the local server instance.

- Lazy Loading: Models are not loaded until a request is made, and the architecture supports keeping the model in memory for a configurable duration to reduce latency on subsequent requests.
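Because the CLI is only a client, any HTTP client can do the same job. The following is a minimal sketch, not an official client: it builds a request for /api/generate using only the Python standard library and sets the keep_alive request field, which controls how long the model stays loaded after the call. The helper names and the 10m value are assumptions for illustration, and the sketch assumes a server listening on the default port.

```python
import json
import urllib.request

# Assumption: a local server on the default port described above.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, keep_alive: str = "10m"):
    """Build (but do not send) a POST request for /api/generate."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,           # ask for a single JSON object, not a stream
        "keep_alive": keep_alive,  # how long the model stays loaded afterwards
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, keep_alive: str = "10m") -> str:
    """Send the request and return the generated text (needs a running server)."""
    request = build_generate_request(model, prompt, keep_alive)
    with urllib.request.urlopen(request) as response:
        return json.load(response)["response"]
```

Calling generate("gemma3", "Say hello in five words.") requires a running server with that model pulled. The first call pays the model load cost; calls made within the keep_alive window should skip it.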

🚀 Quick Start

To get this running, we will bypass the desktop installers and use the CLI for a headless server setup, which is more representative of a production or dev-server environment.

1. Installation (Linux/macOS)

# Standard installation script (inspect before running in prod)

curl -fsSL https://ollama.com/install.sh | sh

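# Verify installation and start the server

# (on Linux the install script may already have registered and started a systemd service)

ollama --version

ollama serve &

2. Running a Model

Pull and run Gemma 3 (4B parameters), a capable and efficient model.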

# Pull the model (downloads weights to local registry)

ollama pull gemma3

# Run interactive mode

ollama run gemma3

3. Creating a Custom Model (The “Modelfile” Workflow)

This is where Ollama shines. We will create a specialized “DevOps Assistant” model.

Create a file named Modelfile:

FROM gemma3

# Set parameters for more deterministic output

PARAMETER temperature 0.3

PARAMETER num_ctx 4096

# Define the system persona

SYSTEM """

You are a Senior Site Reliability Engineer.

Answer questions with brevity and focus on Linux command line solutions.

Do not provide explanations unless asked.

"""

Build and run the custom model:

# Build the model image

ollama create sre-bot -f Modelfile

# Test the API endpoint

curl http://localhost:11434/api/generate -d '{

"model": "sre-bot",

"prompt": "How do I find the process using port 8080?",

"stream": false

}'

Output:

{

"model": "sre-bot",

"response": "lsof -i :8080",

"done": true,

...

}

⚖️ The Verdict: Production Readiness

Ollama has matured from a developer tool into a viable component for edge and on-premise deployments. However, “Production Ready” depends heavily on your definition of production.

| Criteria | Score | Notes |

|---|---|---|

| Stability | 9/10 | The Go runtime is rock solid. Crashing is rare, and it handles OOM (Out of Memory) scenarios gracefully by falling back to CPU or rejecting requests. |

| Performance | 8/10 | Excellent for single-user or low-concurrency use cases. It leverages quantization effectively. However, it lacks the continuous batching throughput of engines like vLLM or TGI for high-traffic serving. |

| DevEx | 10/10 | Unmatched. The Modelfile abstraction and CLI make it the easiest tool to use in the ecosystem. |

| Enterprise Ready | 7/10 | It lacks built-in authentication, rate limiting, and advanced observability (metrics) out of the box. You must put a reverse proxy (Nginx/Traefik) in front of it for secure deployment. |
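The observability gap noted in the table can be partly covered from the client side. Non-streaming responses from /api/generate include timing fields such as eval_count and eval_duration (durations are reported in nanoseconds), which can be turned into basic throughput numbers. The sketch below shows one way to do that; the function name is hypothetical and the sample values are made up for illustration, not measured results.

```python
NANOSECONDS_PER_SECOND = 1e9


def summarize_timing(response: dict) -> dict:
    """Derive simple throughput and latency figures from a response's timing fields."""
    eval_seconds = response.get("eval_duration", 0) / NANOSECONDS_PER_SECOND
    generated_tokens = response.get("eval_count", 0)
    return {
        # Generation speed; guarded against a missing or zero duration.
        "tokens_per_second": generated_tokens / eval_seconds if eval_seconds > 0 else 0.0,
        # Time spent loading the model (relevant for cold starts).
        "load_seconds": response.get("load_duration", 0) / NANOSECONDS_PER_SECOND,
        # End-to-end time for the request.
        "total_seconds": response.get("total_duration", 0) / NANOSECONDS_PER_SECOND,
    }


# Made-up sample values, for illustration only.
sample_response = {
    "eval_count": 120,
    "eval_duration": 4_000_000_000,
    "load_duration": 1_500_000_000,
    "total_duration": 6_000_000_000,
}
print(summarize_timing(sample_response))
```

Figures like these can be logged per request or exported to whatever metrics system sits alongside the reverse proxy; they are a stopgap, not a replacement for server-side monitoring.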

The Architect’s View

Ollama is the gold standard for local development and edge inference. If you are building an internal tool, a desktop application, or a service with moderate traffic that runs on-premise hardware, Ollama is the correct choice.

However, if you are building a public-facing SaaS expecting thousands of concurrent requests, Ollama is not the specialized inference server you need. In that scenario, it serves better as the development environment that mirrors the production models, while production might run on vLLM or optimized cloud endpoints.

💼 Who Should Use This?

- Internal Tooling Teams: For building “Chat with your Docs” apps or coding assistants hosted on an internal server.

- Edge Computing Architects: Deploying LLMs to retail stores, factories, or offline environments where internet connectivity is spotty.

- App Developers: Those integrating AI into desktop apps (Electron, Swift, etc.) where bundling the runtime is necessary.

- R&D Teams: Rapidly prototyping prompts and model configurations using the Modelfile system before committing to heavy infrastructure.

Who should look elsewhere?

- High-frequency trading or real-time bidding systems requiring sub-millisecond latency.

- Public SaaS platforms serving massive concurrent user bases (look at vLLM or TGI).

Need help deploying ollama in your stack? Hire the Architect →

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

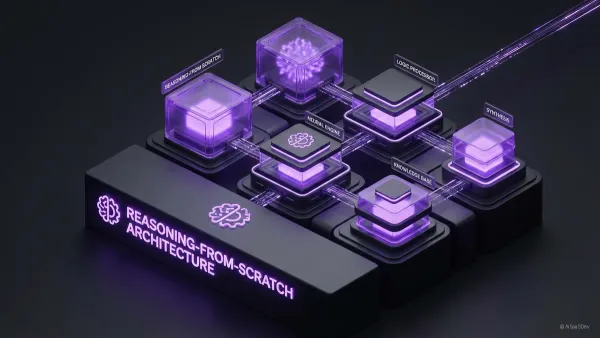

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.