Is MiniMind Production Ready? Deep Dive & Setup Guide

Technical analysis of MiniMind. Architecture review, deployment guide, and production-readiness verdict. 35,953 GitHub stars.

Is MiniMind Production Ready?

MiniMind is trending with 35,953 stars because it dismantles the “black box” nature of Large Language Models. While the industry races toward trillion-parameter models, MiniMind goes in the opposite direction: a 26M-parameter model built from scratch in pure PyTorch.

It solves the barrier-to-entry problem. Most engineers rely on high-level abstractions like Hugging Face transformers or trl without understanding the underlying tensor operations. MiniMind provides a complete, white-box implementation of the entire LLM lifecycle (pretraining, SFT, LoRA, and RLHF, including PPO, DPO, and GRPO). Its smallest model can be trained from scratch on a single consumer GPU (RTX 3090) in about 2 hours for roughly 3 RMB (well under US$1) of rented compute.

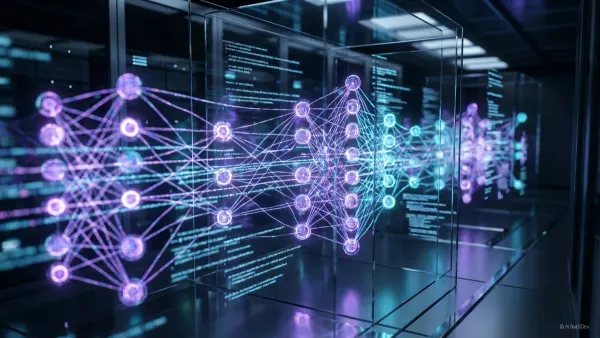

🛠️ Architecture Deep Dive

MiniMind is not a wrapper; it is a pedagogical re-implementation of state-of-the-art LLM architectures.

- Core Framework: Pure PyTorch. The attention, feed-forward, and normalization layers are written out directly rather than imported from transformers, so the raw matrix multiplications and attention mechanisms stay visible.

- Model Topology:

- Base: Llama-style architecture utilizing RMSNorm, RoPE (Rotary Positional Embeddings), and SwiGLU activation functions.

- MoE Support: Includes a Mixture-of-Experts variant that demonstrates sparse activation at micro scale.

- Tokenizer: Custom-trained tokenizer with a small vocabulary (~6,400 tokens), which keeps the embedding layer from dominating the parameter count of the 26M model.

- Training Pipeline:

- Pre-training: Causal Language Modeling (CLM) on cleaned datasets.

- SFT: Supervised Fine-Tuning for instruction following.

- RLHF/RLAIF: Native implementations of PPO (Proximal Policy Optimization), DPO (Direct Preference Optimization), and the cutting-edge GRPO (Group Relative Policy Optimization) used in reasoning models like DeepSeek-R1.

- Distillation: Includes code to distill knowledge from larger models (like DeepSeek-R1) into the MiniMind architecture.

- Inference & Export:

- Supports native PyTorch inference.

- Compatible with third-party inference engines: llama.cpp (via GGUF conversion), vLLM, and Ollama.
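The Base bullet above names RMSNorm and SwiGLU without showing what they compute. Below is a minimal pure-Python sketch of the textbook formulations. It assumes bias-free layers and an illustrative eps of 1e-5. The function names (rms_norm, silu, swiglu_ffn) are hypothetical, and this is not MiniMind's own code, which operates on PyTorch tensors. Plain lists are used so each arithmetic step stays visible.

```python
import math

def rms_norm(x, weight, eps=1e-5):
    """RMSNorm: divide by the root-mean-square of x, then apply a learned per-channel gain.
    Unlike LayerNorm there is no mean subtraction and no bias. eps=1e-5 is an assumption."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]

def silu(v):
    """SiLU (Swish): v * sigmoid(v), written to avoid overflow for large negative v."""
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    e = math.exp(v)
    return v * e / (1.0 + e)

def matvec(m, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: w_down @ (silu(w_gate @ x) * (w_up @ x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]  # element-wise gating
    return matvec(w_down, hidden)

# eps=0 here only to make the numbers easy to check by hand.
print(rms_norm([3.0, 4.0], weight=[1.0, 1.0], eps=0.0))  # ≈ [0.8485, 1.1314]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(swiglu_ffn([1.0, 2.0], identity, identity, identity))
```

RMSNorm skips LayerNorm's mean subtraction. SwiGLU uses three weight matrices instead of the classic two-matrix MLP, which is why Llama-style models usually shrink the FFN hidden size to keep the parameter count comparable.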
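RoPE, also from the Base bullet, encodes position by rotating pairs of query/key dimensions instead of adding a position vector. The sketch below is a hypothetical pure-Python helper, apply_rope. It uses the interleaved-pair form with the base of 10000 from the original RoFormer paper, and MiniMind's configured base may differ. The example checks the property that makes RoPE useful: the attention score depends only on the distance between positions, not on the absolute positions.

```python
import math

def apply_rope(x, pos, base=10000.0):
    """Rotate each consecutive pair (x[2k], x[2k+1]) by angle pos * base**(-2k/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out = []
    for i in range(0, d, 2):            # i == 2k
        theta = pos * base ** (-i / d)  # low-index pairs rotate fastest
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[i], x[i + 1]
        out += [a * c - b * s, a * s + b * c]
    return out

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]
# Same relative offset (query 2 positions after key) at different absolute positions.
score_a = dot(apply_rope(q, 3), apply_rope(k, 1))
score_b = dot(apply_rope(q, 10), apply_rope(k, 8))
print(score_a, score_b)  # equal up to floating-point rounding
```

Many Llama-family implementations pair dimension j with j + d/2 ("rotate half") instead of adjacent dimensions. The two layouts are equivalent up to a fixed permutation of the head dimensions.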
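The MoE Support bullet mentions sparse activation. A minimal sketch of top-k softmax routing for a single token follows. Several choices are assumptions for illustration: top_k=2, renormalizing the selected gate weights, and the toy scaling "experts". The sketch also omits the shared experts and the auxiliary load-balancing loss that production MoE layers (possibly including MiniMind's) add.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, top_k=2):
    """Pick the top_k experts for one token and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    total = sum(probs[i] for i in chosen)
    return [(i, probs[i] / total) for i in chosen]

def moe_forward(x, router_logits, experts, top_k=2):
    """Weighted sum of only the selected experts' outputs; the other experts never run."""
    out = [0.0] * len(x)
    for idx, weight in route_token(router_logits, top_k):
        y = experts[idx](x)
        out = [o + weight * v for o, v in zip(out, y)]
    return out

# Four toy "experts" (hypothetical), each just scaling its input by a constant.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, 0.5, 1.5]
print(route_token(logits))                  # experts 1 and 3 are selected
print(moe_forward([1.0], logits, experts))  # only 2 of the 4 experts were evaluated
```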
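The Tokenizer bullet's reasoning is easy to check with arithmetic. This back-of-the-envelope sketch rests on two assumptions: a hidden size of 512 for the 26M model, and a single embedding matrix shared with the output head (weight tying). The value 32,000 is used only as a comparison point, because it is the vocabulary size of Llama 2's tokenizer.

```python
HIDDEN_DIM = 512           # assumed hidden size of the 26M model
TOTAL_PARAMS = 26_000_000  # headline parameter count, rounded

def embedding_params(vocab_size, hidden_dim=HIDDEN_DIM):
    """Parameters in a vocab_size x hidden_dim embedding table (counted once, assuming weight tying)."""
    return vocab_size * hidden_dim

for vocab in (6_400, 32_000):
    n = embedding_params(vocab)
    print(f"vocab={vocab:>6}: {n:>10,} embedding params = {n / TOTAL_PARAMS:.1%} of 26M")
```

Under these assumptions, the 6,400-token table costs about 3.3M parameters (roughly 12.6% of the budget). A 32,000-token table would consume about 16.4M (roughly 63%), leaving little for the Transformer blocks themselves.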
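GRPO, listed in the Training Pipeline, replaces PPO's learned value-function baseline with statistics computed over a group of answers sampled for the same prompt. The sketch below shows only that advantage step, using a hypothetical helper, group_relative_advantages, and the population standard deviation; some implementations use the sample standard deviation instead. The resulting advantages would then feed a PPO-style clipped objective with a KL penalty, which is not shown.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: normalize each reward against its own group of sampled answers."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]

# Four sampled answers to the same prompt, scored 1 (correct) or 0 (wrong).
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))  # ≈ [1.0, -1.0, -1.0, 1.0]
# If every answer gets the same reward, the group provides no learning signal.
print(group_relative_advantages([1.0, 1.0, 1.0, 1.0]))  # [0.0, 0.0, 0.0, 0.0]
```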
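DPO, also in the Training Pipeline, skips the separate reward model and optimizes directly on preference pairs. The per-pair loss is -log σ(β[(log π(y_w|x) - log π_ref(y_w|x)) - (log π(y_l|x) - log π_ref(y_l|x))]), where y_w and y_l are the chosen and rejected responses. Below is a minimal sketch that takes precomputed summed log-probabilities. The function names and beta=0.1 are illustrative assumptions, not MiniMind's settings.

```python
import math

def neg_log_sigmoid(z):
    """-log(sigmoid(z)), computed without overflow for large |z|."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))

def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given summed log-probs of each full response."""
    chosen_ratio = policy_chosen - ref_chosen        # log pi(y_w|x) - log pi_ref(y_w|x)
    rejected_ratio = policy_rejected - ref_rejected  # log pi(y_l|x) - log pi_ref(y_l|x)
    return neg_log_sigmoid(beta * (chosen_ratio - rejected_ratio))

# Policy identical to the reference: the loss is log(2).
print(dpo_loss(-12.0, -18.0, -12.0, -18.0))  # ≈ 0.6931
# Policy has shifted probability toward the chosen answer: the loss drops below log(2).
print(dpo_loss(-10.0, -20.0, -12.0, -18.0))  # ≈ 0.5130
```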

🚀 Quick Start

The following guide sets up the environment and runs inference using a pre-trained model.

1. Set Up the Environment

```bash
# Clone the repository
git clone https://github.com/jingyaogong/minimind
cd minimind

# Install dependencies (requires PyTorch with CUDA support)
pip install -r requirements.txt
```

2. Download Weights & Run Inference

MiniMind provides pre-trained weights, so you don’t have to train from scratch to try it.

```bash
# Download the MiniMind2 weights (approx 100MB).
# Hugging Face stores model files with Git LFS, so install git-lfs before cloning.
git clone https://huggingface.co/jingyaogong/MiniMind2

# Run the evaluation script.
# It loads the model and starts a CLI chat session.
python eval_llm.py --load_from ./MiniMind2
```

3. (Optional) Start Training

To experience the “2-hour training” promise:

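```bash
# Both phases need the training datasets downloaded first;
# see the repository README for where to place them.

# Pre-training phase
python train_pretrain.py

# SFT phase (after pre-training)
python train_full_sft.py
```

⚖️ The Verdict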

Is MiniMind Production Ready?

For Application Deployment: No. A 26M-parameter model (or even the 100M variant) is functionally a “toy” compared to models with 7B+ parameters. It will hallucinate frequently, lacks deep reasoning capabilities, and has a limited context window. It is not suitable for customer-facing chatbots or complex RAG systems.

For R&D and Education: Yes. This is one of the best resources available for systems architects and ML engineers who want to understand the mechanics of LLMs.

- Algorithm Verification: Excellent for testing new RLHF algorithms (like GRPO) on a small scale before scaling to clusters.

- Hardware Profiling: Useful for understanding memory-bandwidth-bound versus compute-bound operations in Transformer architectures without waiting days for convergence.
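The Hardware Profiling bullet's distinction between memory-bandwidth-bound and compute-bound work comes down to arithmetic intensity, the FLOPs performed per byte moved. The sketch below estimates it for one matrix multiply. It assumes bf16 (2-byte) elements, each operand crossing memory exactly once, and a hypothetical hidden size of 512. The name matmul_intensity is illustrative, not a MiniMind function.

```python
def matmul_intensity(m, k, n, bytes_per_elem=2):
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n], assuming each matrix crosses memory once."""
    flops = 2 * m * k * n  # one multiply and one add per term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved

HIDDEN = 512  # assumed hidden size of the 26M model
# Autoregressive decoding: a single new token (m=1) multiplies a full weight matrix.
print(f"decode : {matmul_intensity(1, HIDDEN, HIDDEN):.2f} FLOPs/byte")
# Training or prefill: thousands of tokens share each read of the weights.
print(f"prefill: {matmul_intensity(4096, HIDDEN, HIDDEN):.2f} FLOPs/byte")
```

Decoding one token lands near 1 FLOP/byte, while a 4,096-token batch reaches about 241. A GPU's ridge point (peak FLOP/s divided by memory bandwidth) is typically in the tens to hundreds of FLOPs per byte. Token-by-token decoding is therefore memory-bound, while batched training and prefill can become compute-bound.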

Recommendation: Use MiniMind to upskill your team on LLM internals or to prototype architectural changes. Do not deploy the model weights to production.

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

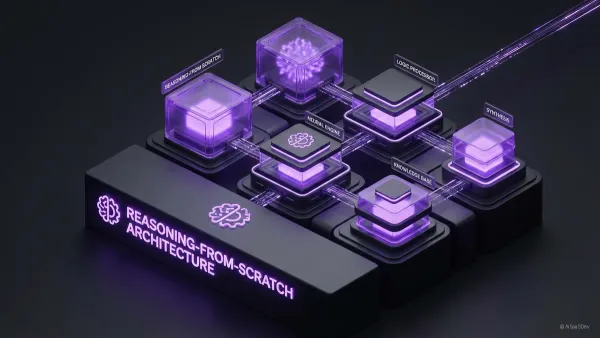

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.