Is LangChain Production Ready? Deep Dive & Setup Guide

Technical analysis of LangChain. Architecture review, deployment guide, and production-readiness verdict for CTOs and Founders.

LangChain is trending with 122.5k stars. But is it ready for production? Here’s the architectural breakdown.

🛠️ What is LangChain?

LangChain has effectively established itself as the “operating system” for Large Language Model (LLM) application development. In the early days of the generative AI boom, developers were manually stitching together HTTP requests to OpenAI’s API, handling retries, context window management, and prompt formatting in raw Python strings. LangChain emerged to solve this fragmentation by providing a standardized interface for the entire lifecycle of an AI application.

At its core, LangChain is a framework that abstracts the complexity of integrating LLMs with external computation and data. It treats language models not just as text generators, but as reasoning engines that can be “chained” together with other components. This solves the critical problem of Model Interoperability; code written for OpenAI can be switched to Anthropic or a local Llama 3 instance with minimal refactoring.
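To make the interoperability point concrete, here is a minimal sketch of the underlying idea in plain Python. It is not LangChain's actual code: `ChatModel`, `FakeOpenAIModel`, `FakeAnthropicModel`, and `summarize` are hypothetical names invented for illustration, and the "models" only echo their input so no API key is needed.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface; not LangChain's actual base class."""

    def invoke(self, prompt: str) -> str: ...


class FakeOpenAIModel:
    """Stand-in for an OpenAI-backed model (makes no network calls)."""

    def invoke(self, prompt: str) -> str:
        return f"[openai] {prompt}"


class FakeAnthropicModel:
    """Stand-in for an Anthropic-backed model (makes no network calls)."""

    def invoke(self, prompt: str) -> str:
        return f"[anthropic] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    # Application logic depends only on the shared interface,
    # so the provider can change without touching this function.
    return model.invoke(f"Summarize: {text}")


print(summarize(FakeOpenAIModel(), "TCP vs UDP"))
print(summarize(FakeAnthropicModel(), "TCP vs UDP"))
```

Because `summarize` only relies on `invoke`, swapping providers is a one-line change at the call site. That is the property the framework's chat model classes aim to provide.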

The framework is gaining massive traction because it addresses the “blank page” problem. It provides pre-built implementations for Retrieval Augmented Generation (RAG), structured output parsing, and agentic workflows. With the recent introduction of LangGraph, the ecosystem has pivoted from simple linear chains (Input A → Output B) to complex, cyclic state graphs, enabling the creation of reliable, long-running autonomous agents that can loop, retry, and maintain memory over time.
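The difference between a linear chain and a cyclic state graph is easier to see in code. The sketch below is a toy state machine in plain Python, not the LangGraph API: the node names (`draft`, `check`), the `route` function, and the retry budget of 5 are all assumptions made for illustration.

```python
from typing import Callable, Dict

State = Dict[str, object]
Node = Callable[[State], State]


def draft(state: State) -> State:
    # Pretend to generate an answer; it only becomes acceptable on attempt 3.
    attempts = int(state["attempts"]) + 1
    answer = "ok" if attempts >= 3 else "bad"
    return {**state, "attempts": attempts, "answer": answer}


def check(state: State) -> State:
    # Validation node: records whether the current draft is acceptable.
    return {**state, "valid": state["answer"] == "ok"}


def route(state: State) -> str:
    # Conditional edge: loop back to "draft" until valid or out of budget.
    if state["valid"] or int(state["attempts"]) >= 5:
        return "END"
    return "draft"


def run_graph(state: State) -> State:
    nodes: Dict[str, Node] = {"draft": draft, "check": check}
    edges = {"draft": lambda s: "check", "check": route}
    current = "draft"
    while current != "END":
        state = nodes[current](state)      # run the node
        current = edges[current](state)    # follow the outgoing edge
    return state


final = run_graph({"attempts": 0, "answer": "", "valid": False})
print(final)
```

The edge from `check` back to `draft` is what a linear chain cannot express. The state dictionary carried through every node is the "memory" that persists across iterations.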

🏗️ Architecture Breakdown

The LangChain architecture is built on a modular, component-based design pattern that emphasizes composability. It relies heavily on Python’s Pydantic for data validation and schema definition, ensuring that inputs and outputs between disparate AI components remain type-safe.

Core Components and Stack

- The Interface Layer (LangChain Core): This is the base abstraction. It defines the standard Runnable protocol. Almost everything in LangChain (prompts, models, retrievers) implements this protocol, allowing components to be piped together using the LangChain Expression Language (LCEL). This declarative approach (similar to Unix pipes) allows for readable and concise chain definitions.

- Model I/O: LangChain decouples the application logic from the model provider. It uses a Factory pattern to instantiate models (LLMs or Chat Models) from providers like OpenAI, Hugging Face, or Cohere. This layer exposes sync and async execution and token usage metadata, and supports retries and rate limiting through configuration rather than hand-written glue code.

- Retrieval Architecture (RAG): For data augmentation, LangChain implements a comprehensive pipeline:

  - Document Loaders: Adapters for PDF, HTML, CSV, Notion, etc.

  - Text Splitters: Algorithms that chunk text along natural boundaries (paragraphs, sentences, tokens) so each piece fits the context window.

  - Vector Stores: Integrations with Pinecone, Milvus, Chroma, and PGVector to store embeddings.

  - Retrievers: The logic that queries the vector store (e.g., similarity search, MMR).

- Agent Orchestration (LangGraph): While the core library handles direct sequences, the ecosystem now pushes complex logic to LangGraph. This is a shift from the legacy AgentExecutor. LangGraph models agent workflows as a state machine (nodes and edges), allowing for cyclic execution (loops), human-in-the-loop breakpoints, and persistent state storage. This is crucial for building agents that need to “think,” fail, correct themselves, and try again.

- Observability (LangSmith): Production AI differs from standard software because model outputs are non-deterministic, so conventional unit tests alone are not enough. LangChain integrates natively with LangSmith to trace execution steps, log token usage, and evaluate model outputs against datasets, providing the necessary telemetry for enterprise deployment.
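The retrieval stages from the Retrieval Architecture item above (split, embed, store, retrieve) can be sketched without any external service. This is a toy built on assumptions: `split_text`, `embed`, and `InMemoryVectorStore` are hypothetical names, the "embedding" is a bag-of-words count rather than a learned vector, and the splitter is a fixed-size word window. Real text splitters and vector stores are considerably more sophisticated.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def split_text(text: str, chunk_size: int = 8) -> List[str]:
    """Text splitter: fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector, not a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical store: keeps (chunk, vector) pairs in a list."""

    def __init__(self) -> None:
        self.rows: List[Tuple[str, Counter]] = []

    def add(self, chunks: List[str]) -> None:
        self.rows.extend((chunk, embed(chunk)) for chunk in chunks)

    def similarity_search(self, query: str, k: int = 1) -> List[str]:
        # Retriever logic: rank stored chunks by similarity to the query.
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


document = (
    "TCP is a connection oriented protocol with delivery guarantees. "
    "UDP is a connectionless protocol that favors low latency instead."
)
store = InMemoryVectorStore()
store.add(split_text(document))
top = store.similarity_search("Which protocol is connectionless?", k=1)
print(top)
```

In a real RAG chain the retrieved chunks would be inserted into the prompt before the model call. The sketch stops at retrieval to keep the moving parts visible.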

🚀 Quick Start

To get a functional LangChain setup running, you need to install the core library and a partner package (usually for the model provider, e.g., OpenAI). This guide assumes you have a Python environment ready.

1. Installation

    # Install core and openai integration
    pip install langchain langchain-openai

    # Optional: Install LangGraph for agent workflows
    pip install langgraph

2. Implementation: A Basic LCEL Chain

This example demonstrates the modern LCEL (LangChain Expression Language) syntax. We will create a simple chain that takes a topic, formats it into a prompt, and streams the response.

    import os

    from langchain_openai import ChatOpenAI
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.output_parsers import StrOutputParser

    # Ensure your API key is set
    # os.environ["OPENAI_API_KEY"] = "sk-..."

    # 1. Initialize the Model
    # We use a standard chat model. Temperature 0 makes it more deterministic.
    model = ChatOpenAI(model="gpt-4o", temperature=0)

    # 2. Define the Prompt Template
    # This abstracts the specific formatting required by the model (System vs User roles)
    prompt = ChatPromptTemplate.from_messages([
        ("system", "You are a senior backend engineer. Answer technically and concisely."),
        ("user", "{topic}"),
    ])

    # 3. Define the Output Parser
    # Converts the raw AI Message object back into a clean string
    parser = StrOutputParser()

    # 4. Create the Chain using LCEL (The Pipe Syntax)
    # This is the signature architecture of LangChain:
    # Prompt -> Model -> Parser
    chain = prompt | model | parser

    # 5. Execute
    print("--- Invoking Chain ---")
    response = chain.invoke({"topic": "Explain the difference between TCP and UDP"})
    print(response)

    # 6. Streaming Example (Crucial for UX)
    print("\n--- Streaming Response ---")
    for chunk in chain.stream({"topic": "Explain Docker in one sentence"}):
        print(chunk, end="", flush=True)

Contextual Note

The | operator in the code above is not acting as Python's bitwise OR. LangChain's Runnable classes overload it (through the __or__ method) so that the expression builds a RunnableSequence. This lets you chain any components that adhere to the Runnable interface, making the code read like a functional pipeline.
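For readers unfamiliar with operator overloading, the mechanism can be reproduced in a few lines of plain Python. The `Step` class below is a hypothetical stand-in, not LangChain's Runnable, and the "model" is a fake that upper-cases its input so the example runs without an API key.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a Runnable; not LangChain's real class."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # `a | b` returns a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


prompt = Step(lambda d: f"Explain {d['topic']} briefly.")
model = Step(lambda text: {"content": text.upper()})  # fake model, no API call
parser = Step(lambda message: message["content"])

chain = prompt | model | parser
result = chain.invoke({"topic": "dns"})
print(result)
```

Python evaluates `prompt | model | parser` left to right, so each `|` wraps the previous composite in a new `Step`. The real implementation adds batching, streaming, and async variants on top of the same basic idea.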

⚖️ The Verdict: Production Readiness

LangChain has matured significantly, moving from a chaotic collection of scripts to a structured framework. However, its rapid evolution brings both power and complexity.

| Criteria | Score | Notes |

|---|---|---|

| Stability | 8/10 | The core API (v0.1+) is stable. However, integrations (community packages) can vary in quality. Breaking changes are less frequent now but still occur in edge cases. |

| Documentation | 7/10 | Massive and comprehensive, but suffers from “version fragmentation.” You will often find tutorials for the legacy Chain classes that are now deprecated in favor of LCEL and LangGraph. |

| Community | 10/10 | Unrivaled. If you have an error, someone else has already solved it on GitHub or Discord. The ecosystem of third-party tools is vast. |

| Enterprise Ready | 9/10 | With the addition of LangSmith for observability and LangGraph for state management, it is ready for high-scale deployment. Major companies (LinkedIn, Uber) use components of this stack. |

The “Abstraction” Caveat

While LangChain is production-ready, it introduces a significant layer of abstraction. For simple API calls, it can feel like bloat. Its true value shines in complex workflows involving RAG, memory, and tool usage. If you are building a simple chatbot, raw API calls might be cleaner. If you are building an autonomous research agent, LangChain (specifically LangGraph) is essential.

💼 Who Should Use This?

✅ Ideal For:

- Enterprise Engineering Teams: Who need a standardized way to build AI apps across different departments without reinventing the wheel.

- Startups & Prototypers: The speed at which you can swap models (e.g., testing Gemini vs. GPT-4) and integrate vector stores allows for incredibly fast iteration cycles.

- Complex Agent Builders: If your app requires the AI to use tools (search, calculators, APIs) and maintain state, the LangGraph component is currently the industry standard.

- Python/JS Shops: With both Python and TypeScript libraries available (feature coverage is close, though individual integrations can differ), it fits into most modern web stacks.

❌ Skip It If:

- You need extreme optimization: If you are counting every millisecond of latency, the overhead of the abstraction layer might be undesirable.

- You are building a custom training loop: LangChain is for inference orchestration, not for training PyTorch models.

- You want zero dependencies: LangChain pulls in a significant number of dependencies. For a simple script, use the vendor SDKs directly.

Need help deploying langchain in your stack? Hire the Architect →

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

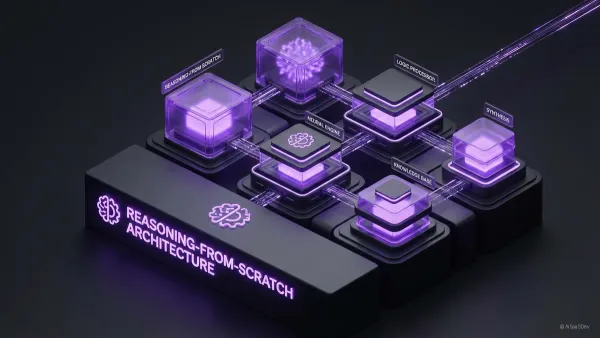

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.