Is Hands-On LLMs Production Ready? Deep Dive & Setup Guide

Technical analysis of Hands-On LLMs. Architecture review, deployment guide, and production-readiness verdict. 18.9k stars.

Hands-On LLMs is trending with 18.9k stars. Here is the architectural breakdown.

🛠️ What is it?

Hands-On LLMs is the official code companion for the O’Reilly book Hands-On Large Language Models, authored by industry heavyweights Jay Alammar (famous for “The Illustrated Transformer”) and Maarten Grootendorst (creator of BERTopic).

Unlike typical “awesome-lists” or theoretical papers, this repository serves as a pragmatic, code-first implementation guide for the entire LLM lifecycle. It is a collection of optimized Jupyter Notebooks that translate complex Transformer theory into executable Python code. It moves beyond simple API calls, teaching developers how to build, fine-tune, and deploy systems using the modern Python AI stack.

Key technical domains covered include:

- Tokenization & Embeddings: Deep dives into how machines interpret text numerically.

- Transformer Architecture: Inspecting attention mechanisms and hidden states.

- RAG (Retrieval-Augmented Generation): Building semantic search engines using vector databases.

- Fine-Tuning: Implementing PEFT (Parameter-Efficient Fine-Tuning) and LoRA on consumer hardware.

- Multimodality: Working with models that process image and text simultaneously.
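To make the fine-tuning item above concrete, here is a back-of-the-envelope sketch of why LoRA fits on consumer hardware. It is not taken from the repository: the helper name `lora_trainable_params` and the layer size (a 4096 x 4096 projection with rank 8) are illustrative assumptions. The idea is that LoRA keeps the original weight matrix frozen and trains only two small low-rank matrices alongside it.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds for one frozen d_out x d_in weight matrix."""
    # A has shape (rank, d_in) and B has shape (d_out, rank); W itself stays frozen.
    return rank * d_in + d_out * rank


# Hypothetical layer size, chosen only for illustration.
d_in, d_out, rank = 4096, 4096, 8

full = d_in * d_out  # parameters updated by full fine-tuning of this layer
lora = lora_trainable_params(d_in, d_out, rank)

print(f"Full fine-tuning: {full:,} parameters")
print(f"LoRA (r={rank}):       {lora:,} parameters")
print(f"Ratio:            {lora / full:.4%}")
```

Under these assumed sizes the adapter trains well under 1% of the layer's parameters, which is what makes the approach practical on a single modest GPU.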

🏗️ Architecture & Stack

This is not a monolithic application but a modular educational framework. The architecture is designed to run primarily on Google Colab (Free Tier T4 GPUs), making it accessible without enterprise infrastructure.
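A rough way to judge whether a model fits on a Colab T4 is to multiply its parameter count by the bytes each parameter occupies. The sketch below does only that arithmetic. `weight_memory_gib` is a hypothetical helper, the parameter counts are approximate, and the estimate ignores activations, the KV cache, and optimizer state, so treat the result as a lower bound.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Approximate memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


# Approximate parameter counts, for illustration only.
models = {"gpt2 (~124M)": 124_000_000, "7B model": 7_000_000_000}

for name, n in models.items():
    for dtype in BYTES_PER_PARAM:
        print(f"{name:>14} {dtype:>8}: {weight_memory_gib(n, dtype):6.2f} GiB")
```

Under these assumptions, the fp16 weights of a 7B model alone come to roughly 13 GiB, which leaves little headroom on a T4's nominal 16 GB. Smaller or quantized checkpoints are the more comfortable fit.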

The stack relies heavily on the Hugging Face Ecosystem:

- Orchestration: Jupyter Notebooks (IPYNB) serve as the interface.

- Core Framework: PyTorch is the underlying tensor library.

- Model Abstraction: transformers (Hugging Face) is used for loading pre-trained models (BERT, GPT, Llama).

- Vector Operations: sentence-transformers provides state-of-the-art embedding generation.

- Data Processing: pandas and datasets handle corpus management.

- Visualization: Custom visualization utilities (unique to this repo) help debug attention heads and embedding clusters.
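Before opening a notebook outside Colab, it can help to confirm which parts of this stack are actually installed. The following is a minimal sketch using only the standard library. The helper name `installed_versions` is hypothetical, and the package list simply mirrors the stack described above using the names these projects publish under on PyPI.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as published on PyPI.
STACK = ["torch", "transformers", "sentence-transformers", "datasets", "pandas"]


def installed_versions(packages):
    """Map each package name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions(STACK).items():
    print(f"{name:<22} {ver or 'not installed'}")
```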

🚀 Quick Start

While the repository is designed to be cloned and run in a notebook environment, here is a consolidated snippet demonstrating the core workflow: loading a model and generating text using the pipeline approach advocated in the early chapters.

1. Clone the repo and install dependencies (accelerate is needed for `device_map="auto"`):

```bash
git clone https://github.com/HandsOnLLM/Hands-On-Large-Language-Models
cd Hands-On-Large-Language-Models
pip install transformers torch sentence-transformers accelerate
```

2. Basic Inference (Python Script):

```python
import torch
from sentence_transformers import SentenceTransformer
from transformers import pipeline

# 1. Initialize a text-generation pipeline.
# device_map="auto" places the model on a GPU when one is available
# (this option requires the accelerate package to be installed).
generator = pipeline(
    "text-generation",
    model="gpt2",
    device_map="auto",
    # Half precision on GPU saves memory; fall back to float32 on CPU.
    torch_dtype=torch.float16 if torch.cuda.is_available() else torch.float32,
)

# 2. Generate text (max_new_tokens caps the length of the continuation)
prompt = "The future of Large Language Models is"
output = generator(prompt, max_new_tokens=50, num_return_sequences=1)
print(f"Input: {prompt}")
print(f"Output: {output[0]['generated_text']}")

# 3. Example of Embedding (Chapter 2 concept)
embedder = SentenceTransformer("all-MiniLM-L6-v2")
embeddings = embedder.encode(["This is a sentence.", "This is another one."])
print(f"\nEmbedding Shape: {embeddings.shape}")
```

⚖️ The Verdict

Hands-On LLMs is an educational resource, not a production library. You would not deploy this repo directly to a server. However, the code patterns it teaches (pipelines, embeddings, semantic search, parameter-efficient fine-tuning) are the same ones production systems are built on.

It is arguably the gold-standard reference for engineers transitioning from traditional software development to AI engineering.

- Stability: High. The notebooks are rigorously tested on Google Colab.

- Relevance: Extremely high. It covers current techniques such as RAG and fine-tuning rather than just prompt engineering.

- Visuals: The repository includes unique helper functions that visualize how data moves through a Transformer, which is invaluable for debugging.

Architect’s Note: If you are building an LLM product, clone this repo to understand how to implement the features, then copy the specific logic (e.g., the semantic search implementation in Chapter 8) into your own FastAPI or Flask application.
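The core of a semantic search feature is small enough to sketch without any framework: embed the documents, embed the query, and rank by cosine similarity. The example below is not the book's Chapter 8 code. It uses hand-written three-dimensional vectors in place of real embeddings, and `cosine_similarity` and `top_k` are hypothetical helper names. In a real service the vectors would come from an embedding model such as the `SentenceTransformer` used in the Quick Start.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return (index, score) pairs for the k documents most similar to the query."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(doc_vecs)]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy stand-ins for real embeddings (made-up values).
docs = ["cats purr", "dogs bark", "stock markets fell"]
doc_vecs = [[0.9, 0.1, 0.0], [0.7, 0.3, 0.1], [0.0, 0.1, 0.9]]
query_vec = [1.0, 0.0, 0.0]  # pretend embedding of a pet-related query

for idx, score in top_k(query_vec, doc_vecs):
    print(f"{score:.3f}  {docs[idx]}")
```

Wrapping `top_k` in a FastAPI or Flask route is then mostly plumbing. For larger corpora, the linear scan would typically be replaced by a vector index.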

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

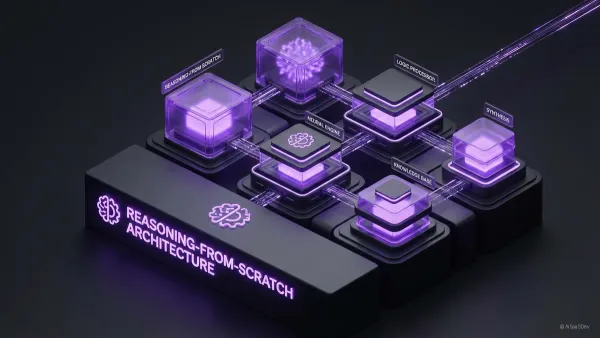

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.