Is Kodezi Chronos Production Ready? Deep Dive & Setup Guide

Technical analysis of Kodezi Chronos. Architecture review, deployment guide, and production-readiness verdict. 5.3k stars.

Kodezi Chronos is trending with 5.3k stars. Here is the architectural breakdown.

🛠️ What is it?

Kodezi Chronos is a “debugging-first” language model architecture designed to close the “Debugging Gap”: the disparity between an LLM’s ability to generate new code (high) and its ability to fix existing bugs (historically low).

This repository is primarily a research release containing benchmarks and evaluation frameworks rather than the raw model weights. It documents the architecture that is reported to score 80.33% on SWE-bench Lite, far ahead of the figures cited for general-purpose models such as GPT-4.1 (~13.8%) and Claude 4.5 Sonnet (~14% on debugging tasks).

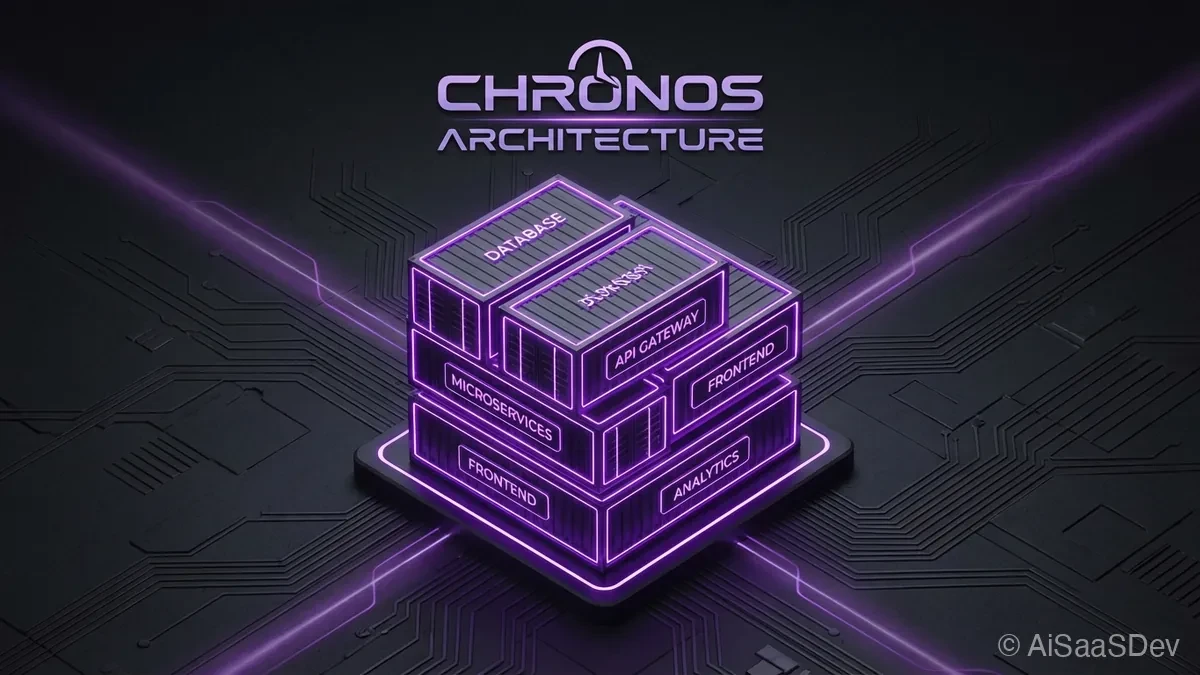

The system is built on a Seven-Layer Architecture that moves beyond simple RAG:

- Adaptive Graph-Guided Retrieval (AGR): Instead of vector similarity, it uses dynamic k-hop graph traversal to navigate codebases. It achieves 92% precision by understanding the semantic relationships between files (e.g., imports, class inheritance) rather than just text matching.

- Persistent Debug Memory (PDM): A repository-specific learning layer that “remembers” past debugging sessions. It improves success rates from 35% to 65% over time by caching successful fix patterns for a specific codebase.

- Orchestration Controller: Manages an autonomous Propose -> Test -> Analyze -> Refine loop, executing fixes in a sandbox environment and iterating an average of 7.8 times before finalizing a patch.
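To make the k-hop idea behind AGR concrete, here is a minimal sketch. It is not Chronos's implementation, which is not published in this repository. It assumes a hypothetical in-memory dependency graph (file -> files it imports or inherits from) and a hypothetical `k_hop_retrieve` helper that uses breadth-first search to collect every file within `k` edges of the file where a failure surfaced.

```python
from collections import deque

# Hypothetical dependency graph: file -> files it imports or inherits from.
# File names are illustrative only, not taken from any real repository.
DEPENDENCY_GRAPH = {
    "api/handlers.py": ["services/billing.py", "models/user.py"],
    "services/billing.py": ["models/invoice.py", "utils/money.py"],
    "models/user.py": ["models/base.py"],
    "models/invoice.py": ["models/base.py"],
    "utils/money.py": [],
    "models/base.py": [],
}


def k_hop_retrieve(graph, start, k):
    """Return {file: hop_distance} for every file within k edges of start."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == k:
            continue  # hop budget reached, do not expand this node further
        for neighbor in graph.get(node, []):
            if neighbor not in distances:
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    return distances


one_hop = k_hop_retrieve(DEPENDENCY_GRAPH, "api/handlers.py", k=1)
two_hop = k_hop_retrieve(DEPENDENCY_GRAPH, "api/handlers.py", k=2)
print(sorted(one_hop))
print(sorted(two_hop))
```

With `k=1` only the direct dependencies are pulled in; with `k=2` the traversal also reaches the files those dependencies rely on. How Chronos chooses or adapts `k` per query is not specified in this article, so the fixed hop budget here is an assumption.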

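The Propose -> Test -> Analyze -> Refine loop and the debug memory can be sketched together. This is a toy under stated assumptions, not the Chronos controller: `run_fix_loop`, `toy_propose`, `toy_run_tests`, and the dict-based `memory` are all hypothetical names, the "model" is a stub, and the test run is a string comparison rather than a real sandbox.

```python
def run_fix_loop(propose, run_tests, bug, memory, max_iterations=10):
    """Iterate Propose -> Test -> Analyze -> Refine until tests pass or budget runs out."""
    hint = memory.get(bug["signature"])  # reuse a remembered fix for this repo, if any
    for iteration in range(1, max_iterations + 1):
        patch = propose(bug, hint)                # Propose
        passed, new_hint = run_tests(patch)       # Test (a real system would sandbox this)
        if passed:
            memory[bug["signature"]] = patch      # remember what worked
            return patch, iteration
        hint = new_hint                           # Analyze + Refine: feed failure back in
    return None, max_iterations


def toy_propose(bug, hint):
    # Stand-in for a model call: with no hint it guesses wrong, with a hint it applies it.
    return hint if hint else "a - b"


def toy_run_tests(patch):
    # Stand-in for a sandboxed test run; returns (passed, hint derived from the failure).
    passed = patch == "a + b"
    return passed, None if passed else "a + b"


memory = {}  # hypothetical per-repository debug memory
bug = {"signature": "add() returns wrong sum"}

first = run_fix_loop(toy_propose, toy_run_tests, bug, memory)
second = run_fix_loop(toy_propose, toy_run_tests, bug, memory)
print(first)   # needs a refinement round because memory starts empty
print(second)  # succeeds on the first attempt thanks to the remembered fix
```

The second run converges faster because the successful patch was cached under the bug's signature, which is the general effect the PDM bullet describes. Real failure analysis would be far richer than returning a ready-made hint.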
🚀 Quick Start

Note: The Chronos model itself is proprietary (accessed via Kodezi OS). This repository lets you run the Multi-Random Retrieval (MRR) benchmark to evaluate other models against the published Chronos baselines.

Prerequisites

- Python 3.8+

- Git

Running the Benchmark

To test how your current models compare against the Chronos baselines using the MRR benchmark:

    # 1. Clone the research repository
    git clone https://github.com/Kodezi/Chronos
    cd Chronos

    # 2. Install dependencies
    pip install -r requirements.txt

    # 3. Run the MRR Benchmark (sample subset)
    # This simulates 100 debugging scenarios with scattered context
    python benchmarks/run_mrr_benchmark_2025.py \
      --model gpt-4 \
      --scenarios 100

    # 4. Analyze the results
    # Generates a report on retrieval precision and root cause accuracy
    python benchmarks/analyze_results.py \
      --results_dir results/gpt-4

⚖️ The Verdict

Kodezi Chronos represents a significant leap in autonomous software engineering, shifting the focus from “Copilots” that write code to “Agents” that fix it.

- Production Readiness: ⚠️ Research/Proprietary. The repository itself is a benchmark suite, not a deployable tool. The actual engine is locked behind the Kodezi OS platform (Beta Q4 2025, GA Q1 2026).

- Use Case: Use this repository to benchmark other agents or to understand the state-of-the-art architecture for building your own debugging tools.

- Stability: The benchmark suite is stable, but the “product” is a managed service.

If you are building AI dev tools, the AGR and PDM concepts documented here are strong reference designs to study. If you just want to fix bugs, you will have to wait for the commercial release.

Recommended Reads

Is YuPi AI Guide Production Ready? Deep Dive & Setup Guide

Technical analysis of YuPi AI Guide. Architecture review, deployment guide, and production-readiness verdict. 2.7k stars.

Is Deepnote Production Ready? Deep Dive & Setup Guide

Technical analysis of Deepnote's open-source ecosystem. Architecture review of the reactivity engine, file format, and conversion tools. 2.5k stars.

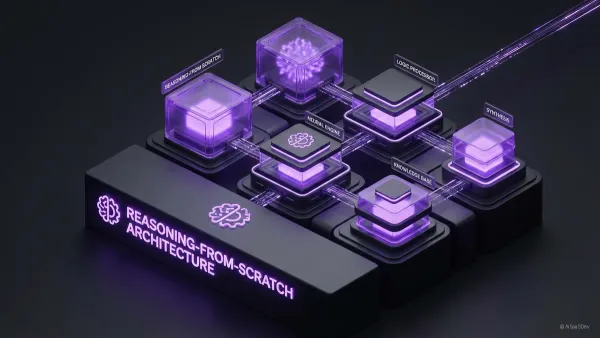

Is Reasoning From Scratch Production Ready? Deep Dive & Setup Guide

Technical analysis of Reasoning From Scratch. Architecture review, deployment guide, and production-readiness verdict. 2.4k stars.